In an age of information overload and strong opinions, it's more important than ever to prioritize accuracy and credibility. Unfortunately, relying on ChatGPT's flawed dataset could actually have the opposite effect.

For those who haven’t caught the recent wave, ChatGPT is an AI language model created by OpenAI that has gained widespread popularity thanks to its ability to answer almost any question in a manner nearly indistinguishable from a human.

Recently I read a fascinating op-ed on ChatGPT titled “ChatGPT and the Enshittening of Knowledge”. The piece discusses the nature of ChatGPT’s dataset and how it is set up to propagate bad information: people copy ChatGPT’s responses and publish them as their own, which spreads whatever errors those responses contain. It sparked my curiosity and made me look more closely at where exactly this data comes from, why this type of dataset was chosen in the first place, and what problems are likely to surface because of OpenAI’s choice of data source.

ChatGPT’s Dirty Secret

Let's take a closer look at where ChatGPT gained its extensive knowledge. Describing the source of that knowledge, OpenAI says, “These models were trained on vast amounts of data from the internet written by humans, including conversations, so the responses it provides may sound human-like.”

If you ask ChatGPT directly about its training data, it confirms the above statement and adds that the primary purpose of its training was “to develop my ability to generate human-like responses to various prompts, and to do so in a way that is fluent and natural-sounding.” OpenAI even states on its website that “outputs may be inaccurate, untruthful, and otherwise misleading at times.”

Despite this, many people rely on ChatGPT as a source of knowledge, even though the dataset used to train it may contain inaccuracies. The reason is that ChatGPT is an incredibly impressive language model: its responses are fluent and grammatically polished, which can make them seem factual even when they are not.

This poses a significant problem for us, as the information provided by ChatGPT can be misleading, and users may not realize that they need to fact-check the responses they receive.

The Risks of Using ChatGPT

For most use cases, using ChatGPT is fairly harmless, and copying or modifying its responses carries no significant consequences. However, because of the flawed data source discussed above, there are many use cases that could leave you looking foolish, unprofessional, or lazy.

Many people have started adopting ChatGPT into their daily work to help complete tasks and draft responses. Routine use like this can make people casual about how they use ChatGPT, to the point that they neglect to fact-check its output.

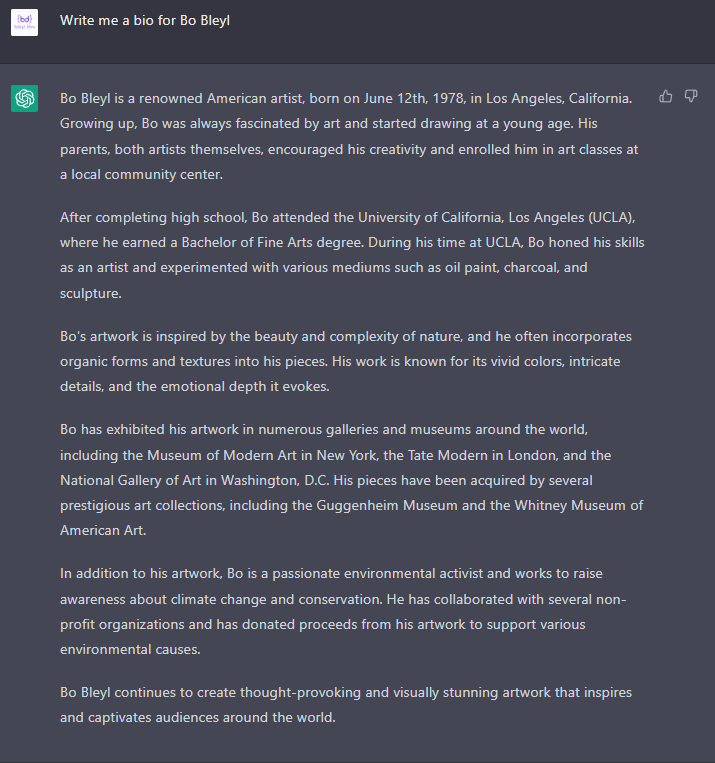

For example, I had the following conversation with ChatGPT:

If you search the web for an artist named Bo Bleyl, you will find absolutely nothing (just a whole lot of stuff about me). Because of the way I phrased the question, ChatGPT made up a bio and spat it out to me. And it does so with such cohesiveness and conviction that it’s nearly impossible to tell whether the response is legit without doing some research and realizing that it’s completely made up.

You can clearly see why this could be so problematic. ChatGPT is an incredible language model, but it cannot be trusted when it comes to the facts. And the reliability of future versions should be questioned even more, since they will most likely be trained on hundreds of thousands of examples of writing that ChatGPT produced itself.

Introducing Credibility

So how do we make sure the information we present is accurate when using ChatGPT? There are already quite a few tools that help detect whether a given text originated from ChatGPT, such as the AI Text Classifier by OpenAI. I have no doubt that in the coming months, these types of tools and plugins will become even more common. In the meantime, however, it's important to do your own fact-checking as you would have done before ChatGPT, using Google and other knowledge databases to verify the information.

For questions where you're seeking answers, it may be better to Google your question instead of relying solely on ChatGPT. This will allow you to see a range of opinions and facts on the matter, rather than just relying on one source. Remember that ChatGPT is a tool that can be useful, but there is a time and place for everything. By taking responsibility for your own fact-checking, you can ensure that the information you're presenting is accurate and credible.

Conclusion

As incredible a tool as ChatGPT is, there is far too much hype around it and far too much credibility being given to it. It’s important to look back at what OpenAI, the maker of ChatGPT, states on its website: “outputs may be inaccurate, untruthful, and otherwise misleading at times”. We need to proceed with caution and ensure that whatever information we put out there that was created through or in coordination with ChatGPT is fact-checked and accurate. Otherwise, we may end up looking foolish.